Kubernetes Node Shutdown Results in New Node Startup

A resilient Kubernetes cluster can cope with a crashing node and simply starts a new one.

Motivation

The number of nodes in a Kubernetes cluster is expected to change: you update nodes from time to time, or you scale the cluster to match traffic peaks. This is especially true when using spot instances in a cloud environment. Deployments therefore need to be node-independent and configured so that their pods can be rescheduled onto a newly started node or onto an existing node that still has free resources.

Structure

Before shutting down a node, we verify that the cluster is healthy and that the deployments are ready. Afterward, we shut down a node that hosts a specific Kubernetes deployment and expect the deployment's pods to be rescheduled on another node and a new node to start up within a reasonable amount of time.
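The checks described above can be sketched in a few lines of Python. This is only an illustration of the pass/fail logic, not how the template itself is implemented. It assumes the inputs are dictionaries shaped like the JSON returned by `kubectl get nodes -o json` and `kubectl get deployment <name> -o json`. The function names and the `make_node` fixture helper are hypothetical, and "a new node started" is approximated by comparing the number of ready nodes before and after the shutdown.

```python
from typing import Any, Dict, List


def make_node(name: str, ready: bool) -> Dict[str, Any]:
    """Hypothetical fixture: build a minimal node object for experimenting offline."""
    status = "True" if ready else "False"
    return {
        "metadata": {"name": name},
        "status": {"conditions": [{"type": "Ready", "status": status}]},
    }


def node_is_ready(node: Dict[str, Any]) -> bool:
    """A node counts as ready if it has a Ready condition with status "True"."""
    conditions = node.get("status", {}).get("conditions", [])
    return any(
        c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
    )


def ready_node_names(node_list: Dict[str, Any]) -> List[str]:
    """Names of all ready nodes in a node list object, sorted for stable output."""
    return sorted(
        n["metadata"]["name"] for n in node_list.get("items", []) if node_is_ready(n)
    )


def deployment_is_ready(deployment: Dict[str, Any]) -> bool:
    """Ready if readyReplicas has reached the desired replica count.

    spec.replicas defaults to 1 and status.readyReplicas is omitted when zero.
    """
    desired = deployment.get("spec", {}).get("replicas", 1)
    ready = deployment.get("status", {}).get("readyReplicas", 0)
    return ready >= desired


def experiment_passed(
    nodes_before: Dict[str, Any],
    nodes_after: Dict[str, Any],
    deployment_after: Dict[str, Any],
) -> bool:
    """Assumption: the cluster has recovered once the deployment is ready again
    and at least as many nodes are ready as before the shutdown."""
    capacity_restored = len(ready_node_names(nodes_after)) >= len(
        ready_node_names(nodes_before)
    )
    return deployment_is_ready(deployment_after) and capacity_restored
```

In a real run, `nodes_after` and `deployment_after` would be fetched repeatedly until the check passes or a timeout you consider reasonable has elapsed.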

Solution Sketch

Warning

Be aware that this experiment shuts down a node. Make sure this is acceptable and that the node is either virtual or can otherwise be started again afterward.

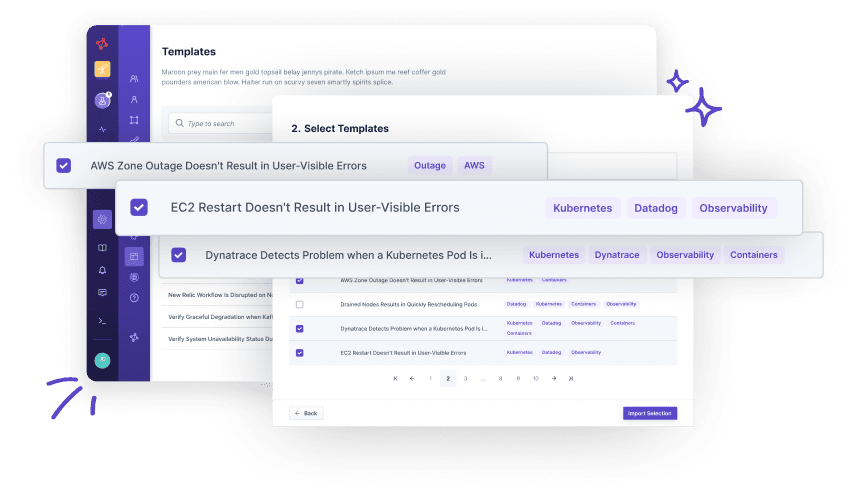

How to use this template?

Import via Hub Connection

Steadybit’s Reliability Hub is already connected to your platform. If you are an admin, you can import templates with just one click.

Are you on-prem?

This is how you import templates