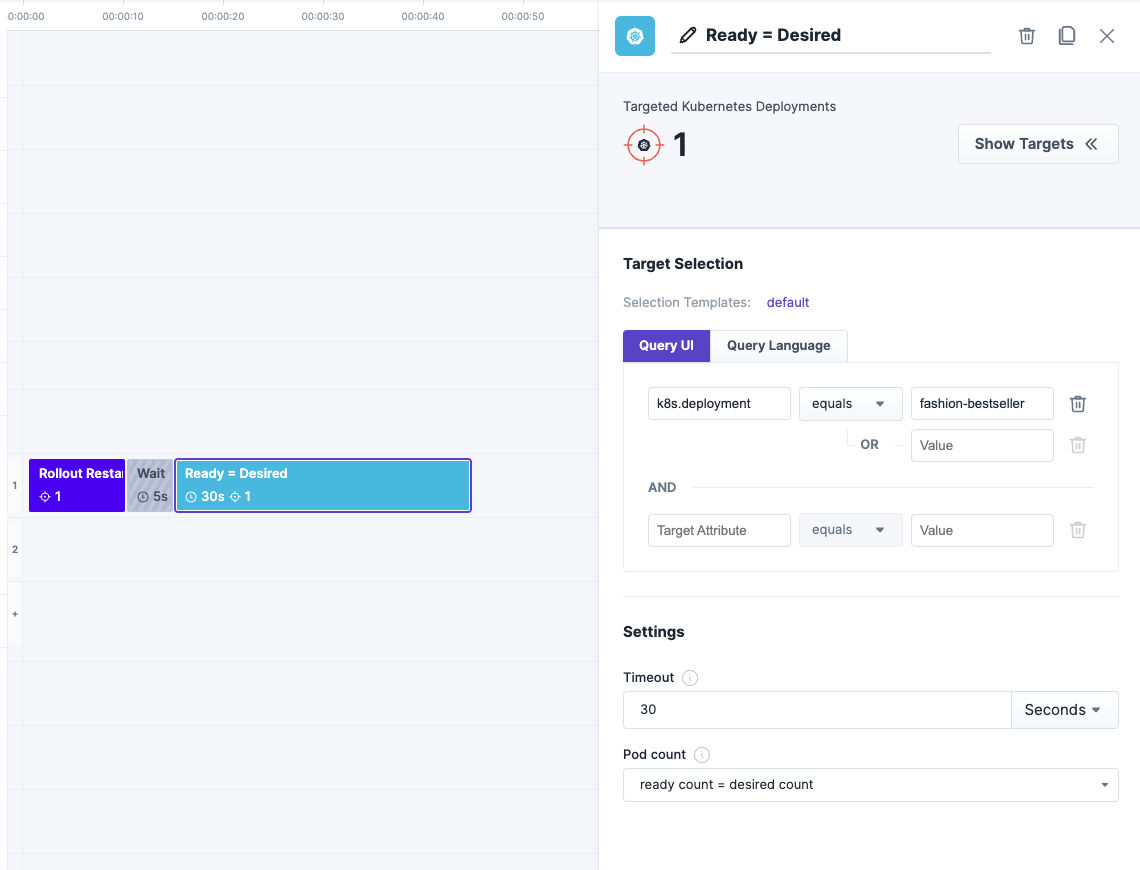

Deployment Pod Count

Verifies Kubernetes Deployment pod counts

Targets: Kubernetes Deployments
