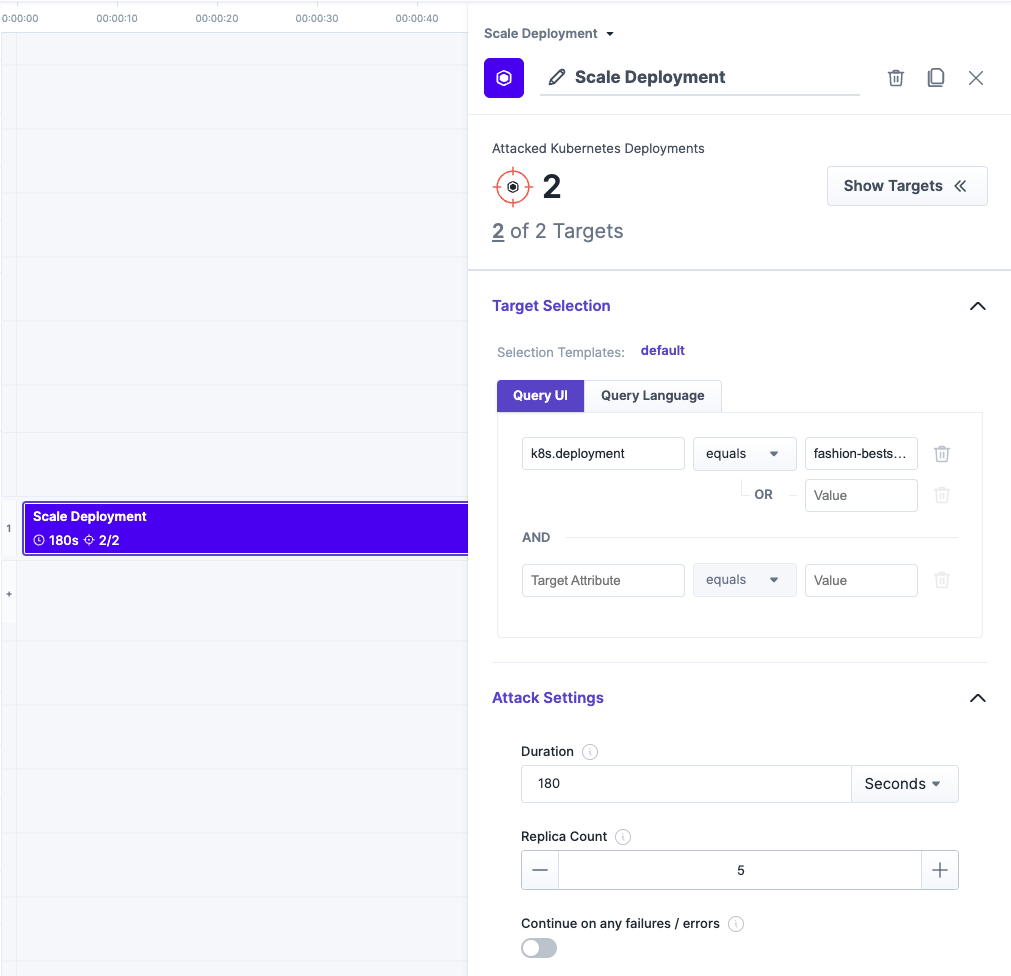

Scale Deployment

Scale a Kubernetes Deployment up or down

Targets: Kubernetes Deployments
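Scaling a Deployment up or down means changing its `spec.replicas` field. From the command line, `kubectl scale deployment/<name> --replicas=<n>` does this directly. The sketch below is a minimal illustration that assumes a hypothetical helper `scale_patch`, which is not part of this action or of any Kubernetes client library. It computes the JSON merge-patch body for a new replica count: a positive delta scales up, a negative delta scales down, and the result is clamped at zero.

```python
import json


def scale_patch(current_replicas: int, delta: int) -> dict:
    """Hypothetical helper: build a merge-patch body setting a Deployment's replicas.

    A positive delta scales up, a negative delta scales down. The target is
    clamped at 0 because a Deployment cannot have a negative replica count.
    """
    if current_replicas < 0:
        raise ValueError("current_replicas must be non-negative")
    target = max(0, current_replicas + delta)
    # spec.replicas is the field that a scale operation on a Deployment changes
    return {"spec": {"replicas": target}}


if __name__ == "__main__":
    # Upscale from 3 to 5 replicas, then downscale from 3 to 1.
    print(json.dumps(scale_patch(3, 2)))   # {"spec": {"replicas": 5}}
    print(json.dumps(scale_patch(3, -2)))  # {"spec": {"replicas": 1}}
```

The returned body could be applied with `kubectl patch deployment <name> --type merge -p '{"spec": {"replicas": 5}}'`, which has the same effect as the `kubectl scale` command above.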
